As a senior UX designer, you’ve likely started working with products that incorporate generative AI: chatbots, content assistants, image creators and so on. One big challenge is that these models sometimes produce outputs that look plausible but are false. These are often called hallucinations (Nielsen Norman Group). It’s important to recognise how hallucinations affect design, user trust and the product experience, and what you can do about them.

What are AI hallucinations?

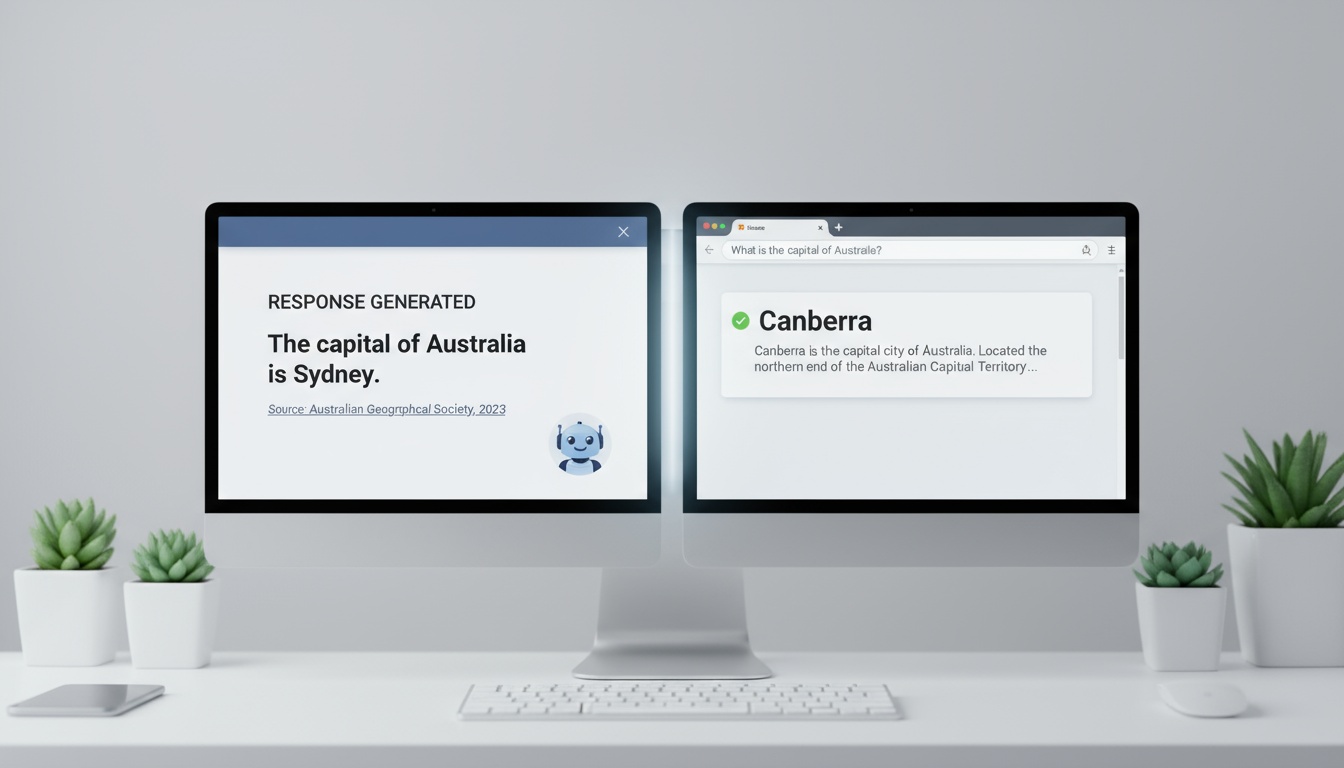

A hallucination happens when an AI model generates output that seems believable but is incorrect or nonsensical (Nielsen Norman Group).

- Examples: a language model confidently producing a fabricated quote; an image generator adding extra limbs to a person.

- Key point: the model may not know it’s wrong, because it isn’t designed to “know” truth in the human sense.

Why do hallucinations happen?

- These models are essentially “next-word predictors”: they learn patterns from huge volumes of text (or pixels), then produce whatever seems statistically likely to follow.

- Their training data is massive and messy: accurate and inaccurate information, jokes, satire and trolling, all mixed together. That means “garbage in” can lead to “garbage out”.

- Because they don’t have built-in fact-checking or a sense of meaning in the way humans do, they can output confidently wrong information.
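
To make the “next-word predictor” idea concrete, here is a deliberately tiny sketch in TypeScript. It is a toy bigram model, an assumption chosen purely for illustration (real generative models are large neural networks over tokens, not word-count tables), trained on a made-up three-sentence corpus in which the wrong answer appears more often than the right one. The names `train`, `predictNext` and `generate` are hypothetical.

```typescript
// Toy bigram "next-word predictor", for illustration only.
// Real models are neural networks over tokens, but the core idea is similar:
// pick what is statistically likely to come next, not what is true.
type BigramTable = Record<string, Record<string, number>>;

// Count how often each word follows each other word in the corpus.
function train(corpus: string[]): BigramTable {
  const table: BigramTable = {};
  for (const sentence of corpus) {
    const words = sentence.toLowerCase().split(/\s+/);
    for (let i = 0; i < words.length - 1; i++) {
      const followers: Record<string, number> = table[words[i]] ?? {};
      followers[words[i + 1]] = (followers[words[i + 1]] ?? 0) + 1;
      table[words[i]] = followers;
    }
  }
  return table;
}

// Greedy choice: return the most frequent follower. Nothing here checks whether it is true.
function predictNext(table: BigramTable, word: string): string | undefined {
  const followers = table[word];
  if (followers === undefined) return undefined;
  let best: string | undefined = undefined;
  let bestCount = 0;
  for (const candidate of Object.keys(followers)) {
    if (followers[candidate] > bestCount) {
      best = candidate;
      bestCount = followers[candidate];
    }
  }
  return best;
}

// Keep appending the predicted next word until there is no continuation or maxWords is reached.
function generate(table: BigramTable, start: string, maxWords: number): string {
  const words = [start];
  let current = start;
  for (let i = 0; i < maxWords; i++) {
    const next = predictNext(table, current);
    if (next === undefined) break;
    words.push(next);
    current = next;
  }
  return words.join(" ");
}

// "Garbage in": the wrong answer appears twice as often as the right one.
const corpus = [
  "the capital of australia is sydney",
  "the capital of australia is sydney",
  "the capital of australia is canberra",
];
const model = train(corpus);
console.log(generate(model, "the", 10)); // prints "the capital of australia is sydney"
```

Because the toy model only counts frequencies, it completes the sentence with the more common but incorrect city, and nothing in the code could tell it otherwise.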

Why this matters for UX designers

- If the AI in your product states something false with high confidence, users may believe it and be misled. When they discover the error, their trust in your product erodes.

- You design the interface and interactions around the AI, so you get to decide how potentially unreliable outputs are surfaced and mitigated.

- The user experience must account for uncertainty, transparency, and verification. Ignoring hallucination risk invites bad UX and even real harm, especially in high-stakes domains such as health, finance or law.

What you can do: Design choices to manage hallucinations

Here are practical design patterns you can build into your product to reduce risk and improve the user experience:

Use language that signals uncertainty

- For low-confidence outputs, the AI (or the interface) should open with phrases like “I’m not completely sure, but…” rather than definitive statements.

- This helps set correct user expectations: “This may be wrong, so check me.”

- Avoid generic disclaimers in small print: don’t bury “may be inaccurate” in a footnote. Make the caveat part of the UX itself (Nielsen Norman Group).
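
As an illustration of putting the caveat into the reply itself, the sketch below assumes each answer arrives with a confidence score between 0 and 1 (where that score comes from is outside the sketch) and that 0.6 is a sensible cut-off. Both the `AiAnswer` shape and the threshold are hypothetical and would need tuning with real users.

```typescript
// Hypothetical answer shape; `confidence` (0 to 1) is assumed to be supplied upstream,
// for example by a model score or an agreement check. This sketch does not compute it.
interface AiAnswer {
  text: string;
  confidence: number;
}

// Hypothetical cut-off below which the reply opens with a hedge.
const LOW_CONFIDENCE_THRESHOLD = 0.6;

// The caveat becomes the opening words of the reply instead of a footnote.
function phraseAnswer(answer: AiAnswer): string {
  if (answer.confidence < LOW_CONFIDENCE_THRESHOLD) {
    return `I'm not completely sure about this, so please verify it: ${answer.text}`;
  }
  return answer.text;
}

console.log(phraseAnswer({ text: "The store opens at 9 a.m.", confidence: 0.4 }));
// prints "I'm not completely sure about this, so please verify it: The store opens at 9 a.m."
```

Keeping the hedge inside the message, rather than in a separate disclaimer element, means users see it at exactly the moment they read the claim.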

Show confidence or reliability indicators

- If the model or system can estimate it, display a confidence rating (e.g., High / Medium / Low) or a percentage, so users can judge how much to trust the output (Nielsen Norman Group).

- If precise numbers aren’t possible, at least convey categories or warnings.

- Be careful: even a moderate percentage can lead users to over-trust the output, so design these indicators with caution.
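
One way to turn a raw score into the High / Medium / Low categories described above is a simple threshold mapping. In this sketch the cut-offs (0.85 and 0.6) and the rule of showing a percentage only when scores are known to be calibrated are assumptions, not established values; an `Unknown` label covers the case where no score exists at all.

```typescript
// Category labels shown to users; "Unknown" is used when no score is available.
type ConfidenceLabel = "High" | "Medium" | "Low" | "Unknown";

// Hypothetical thresholds; real cut-offs should come from testing scores against actual accuracy.
function confidenceLabel(score: number | null): ConfidenceLabel {
  if (score === null || isNaN(score)) return "Unknown";
  if (score >= 0.85) return "High";
  if (score >= 0.6) return "Medium";
  return "Low";
}

// Show the category by default and add the percentage only when scores are assumed calibrated,
// because a precise-looking number can invite over-trust.
function confidenceBadge(score: number | null, scoresAreCalibrated: boolean): string {
  const label = confidenceLabel(score);
  if (label === "Unknown") return "Confidence unknown: please verify";
  if (score !== null && scoresAreCalibrated) {
    return `${label} confidence (${Math.round(score * 100)}%)`;
  }
  return `${label} confidence`;
}

console.log(confidenceBadge(0.72, false)); // prints "Medium confidence"
console.log(confidenceBadge(0.72, true)); // prints "Medium confidence (72%)"
```

Defaulting to categories keeps the indicator useful even when the underlying numbers are rough.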

Expose sources or allow verification

- Provide links or references that back up the AI’s answer. That supports users who want to dig deeper.

- Example: After the AI states a fact, show “Based on 3 sources: [link1], [link2], [link3]”.

- This also signals: “I may be wrong; check me.”

- But be mindful: if users assume that references always mean the answer is true, you might create a false sense of security.
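
The “Based on 3 sources” line could be generated along these lines. The `SourceLink` shape is hypothetical, and the sketch neither fetches nor checks sources; it only formats them, and it shows an explicit note when there is nothing to cite so that a missing source is visible rather than silent.

```typescript
// Hypothetical source record; how sources are found (search, retrieval, citations) is out of scope.
interface SourceLink {
  title: string;
  url: string;
}

// Formats the attribution line, or an explicit prompt to verify when no sources exist.
function sourcesLine(sources: SourceLink[]): string {
  if (sources.length === 0) {
    return "No sources found for this answer. Please verify it independently.";
  }
  const noun = sources.length === 1 ? "source" : "sources";
  const links = sources.map((s) => `${s.title} (${s.url})`).join(", ");
  return `Based on ${sources.length} ${noun}: ${links}`;
}

console.log(
  sourcesLine([
    { title: "A", url: "https://example.com/a" },
    { title: "B", url: "https://example.com/b" },
  ]),
); // prints "Based on 2 sources: A (https://example.com/a), B (https://example.com/b)"
```

Note that the wording says the answer is based on the sources, not that the sources prove it.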

Show multiple responses or highlight inconsistency

- One technique: generate multiple answers to the same prompt behind the scenes and compare them. If the outputs are inconsistent, flag it.

- In the UI, you might show: “Here are three possible answers; they differ.” Then let the user decide.

- This helps surface uncertainty and avoids presenting one “confident” answer that may be wrong.
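
A minimal sketch of the multiple-answer check, assuming the product has already collected several responses to the same prompt (the samples below are made up). It normalises each answer, measures how many agree with the most common one, and flags the set when agreement falls below `minAgreement`. Exact string matching after light normalisation is a crude stand-in for comparing meaning, and the default of 1 (every sample must agree) is an assumption.

```typescript
// Result of comparing several answers sampled for the same prompt.
interface ConsistencyResult {
  majorityAnswer: string;
  distinctAnswers: string[];
  agreement: number; // share of samples matching the most common answer (0 to 1)
  inconsistent: boolean;
}

// Light normalisation: ignore case, extra spaces and trailing full stops or exclamation marks.
function normalise(answer: string): string {
  return answer.toLowerCase().replace(/[.!]+\s*$/, "").replace(/\s+/g, " ").trim();
}

// Flags the samples as inconsistent when agreement is below minAgreement (hypothetical default: 1).
function checkConsistency(samples: string[], minAgreement = 1): ConsistencyResult {
  if (samples.length === 0) throw new Error("need at least one sample");
  const counts: Record<string, number> = {};
  const firstSeen: Record<string, string> = {};
  for (const sample of samples) {
    const key = normalise(sample);
    counts[key] = (counts[key] ?? 0) + 1;
    if (firstSeen[key] === undefined) firstSeen[key] = sample;
  }
  let bestKey = "";
  let bestCount = 0;
  for (const key of Object.keys(counts)) {
    if (counts[key] > bestCount) {
      bestKey = key;
      bestCount = counts[key];
    }
  }
  const agreement = bestCount / samples.length;
  return {
    majorityAnswer: firstSeen[bestKey],
    distinctAnswers: Object.keys(firstSeen).map((key) => firstSeen[key]),
    agreement,
    inconsistent: agreement < minAgreement,
  };
}

// UI copy: when samples disagree, list the alternatives instead of one confident answer.
function consistencyMessage(result: ConsistencyResult): string {
  if (!result.inconsistent) return result.majorityAnswer;
  return `Here are ${result.distinctAnswers.length} possible answers; they differ: ${result.distinctAnswers.join(" / ")}`;
}

// Made-up samples standing in for several model responses to the same prompt.
const result = checkConsistency(["Canberra", "canberra.", "Sydney"]);
console.log(consistencyMessage(result)); // prints "Here are 2 possible answers; they differ: Canberra / Sydney"
```

Lowering `minAgreement` (for example to 0.6) would tolerate an occasional outlier, at the cost of surfacing fewer disagreements to users.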

Design-thinking questions for your next project

As you start using generative AI features in your product, ask yourself:

- In what contexts would an incorrect output cause serious harm (legal, health, financial, reputational)?

- How will I signal uncertainty without undermining the usefulness of the feature?

- How can I allow users to verify the information provided by the AI, and how obvious is that path?

- If the AI output is wrong, how will the UI let the user correct or flag it?

- Where should I balance automation against keeping a human in the loop to reduce the risk of hallucinations affecting users?

Final thoughts

Working with AI doesn’t mean pretending it’s perfect. As a UX designer, you can build experiences that acknowledge the limits of generative models and thereby earn user trust. By designing for uncertainty, enabling verification, and managing expectations, you create a stronger, more resilient user experience. Use the patterns above to keep your design human-centric, realistic, and reliable.

This article originally appeared on lightrains.com
